Third-Party ChatGPT Plugins Could Lead to Account Takeovers

Cybersecurity researchers have found that third-party plugins for OpenAI's ChatGPT could serve as a new attack surface for threat actors seeking unauthorized access to sensitive data.

According to new research published by Salt Labs, security flaws found in ChatGPT itself and in its plugin ecosystem could allow attackers to install malicious plugins without users' consent and hijack accounts on third-party websites such as GitHub.

ChatGPT plugins, as the name implies, are tools designed to run on top of the large language model (LLM) in order to retrieve up-to-date information, run computations, or connect to external services.

OpenAI has since introduced GPTs, customized versions of ChatGPT tailored to specific use cases that reduce reliance on third-party services. As of March 19, 2024, ChatGPT users can no longer install new plugins or start new conversations with existing plugins.

One of the flaws discovered by Salt Labs involves manipulating the OAuth workflow to trick a user into installing an arbitrary plugin. It takes advantage of the fact that ChatGPT does not validate that the user actually initiated the plugin installation.
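
A common defense against this class of flaw is to bind each OAuth flow to the session that started it through an unguessable `state` value. The following is a minimal sketch of that general technique, not a description of how ChatGPT implements or fixed the flow; the in-memory store and the function names `begin_install` and `complete_install` are hypothetical.

```python
import hmac
import secrets

# Hypothetical in-memory store mapping a session ID to its pending OAuth state.
pending_states: dict[str, str] = {}


def begin_install(session_id: str) -> str:
    """Issue an unguessable state value when the user starts an install."""
    state = secrets.token_urlsafe(32)
    pending_states[session_id] = state
    return state


def complete_install(session_id: str, returned_state: str) -> bool:
    """Accept the OAuth callback only if this session started the flow."""
    expected = pending_states.pop(session_id, None)  # single use
    if expected is None:
        return False
    # Constant-time comparison of the issued and returned values.
    return hmac.compare_digest(expected.encode(), returned_state.encode())
```

With a check like this, a callback link crafted by an attacker and opened by the victim would be rejected, because the victim's session never issued the matching state.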

This could allow attackers to intercept and exfiltrate all data shared by the victim, which may include confidential information.

The cybersecurity firm also found flaws in PluginLab that threat actors could exploit to carry out zero-click account takeover attacks, gaining control of an organization's account on third-party platforms such as GitHub and access to its source code repositories.

“‘auth.pluginlab[.]ai/oauth/authorized’ does not authenticate the request, which means that the attacker can insert another memberId (aka the victim) and get a code that represents the victim,” Aviad Carmel, a security researcher, explained. “With that code, he can use ChatGPT and access the GitHub of the victim.”

The victim's memberId can be obtained by querying the endpoint “auth.pluginlab[.]ai/members/requestMagicEmailCode.” There is no evidence that the flaw has been exploited to compromise any user data.
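
The missing check described in the quote above can be illustrated with a short sketch. It assumes a server that already knows the caller's identity from a login session; the `Session` type, the `Forbidden` exception, and the `issue_code` function are hypothetical and do not reflect PluginLab's actual code or API.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    # Identity established at login, never taken from the request body.
    member_id: str


class Forbidden(Exception):
    """Raised when the caller may not act for the requested member."""


def issue_code(session: Optional[Session], requested_member_id: str) -> str:
    """Issue an authorization code only for the authenticated member."""
    if session is None:
        raise Forbidden("request is not authenticated")
    if session.member_id != requested_member_id:
        raise Forbidden("memberId does not match the authenticated user")
    return secrets.token_urlsafe(16)
```

The point of the sketch is that the memberId supplied in the request is compared against the server-side identity rather than trusted on its own.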

Several plugins, including Kesem AI, were also found to contain an OAuth redirection manipulation flaw. By sending a specially crafted link to the victim, an attacker could steal the account credentials associated with the plugin itself.
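
Redirection flaws of this kind are usually countered by comparing the requested `redirect_uri` against a pre-registered list using exact string matching. The sketch below shows that general approach under the assumption of a single registered callback; the URL and the function name are hypothetical and are not taken from any of the affected plugins.

```python
# Hypothetical set of callback URLs registered for the client.
ALLOWED_REDIRECTS = frozenset({"https://chat.example.com/oauth/callback"})


def is_allowed_redirect(redirect_uri: str) -> bool:
    """Accept only an exact match; no prefix or substring comparison."""
    return redirect_uri in ALLOWED_REDIRECTS
```

Exact matching is deliberately strict: a look-alike host or an extra query parameter in the attacker's link fails the check instead of slipping past a prefix comparison.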

The disclosure comes several weeks after Imperva detailed two cross-site scripting (XSS) vulnerabilities in ChatGPT that, if chained together, could be used to compromise an account.

In December 2023, security researcher Johann Rehberger demonstrated how malicious actors could build custom GPTs that phish for user credentials and transmit the stolen data to an external server.

A Novel Attack for Remote Keylogging on AI Assistants

The findings also follow research published this week on an LLM side-channel attack that uses token length to covertly extract encrypted responses from AI assistants over the web.

“LLMs generate and transmit responses as a series of tokens (similar to words), with each token being transmitted as it is generated from the server to the user,” according to a group of scholars from Offensive AI Research Lab and Ben-Gurion University.

“Although this process remains encrypted, the sequential transmission of tokens reveals an additional side-channel known as the token-length side-channel.” Despite the encryption, the size of the packets can reveal the length of the tokens, potentially allowing attackers on the network to infer sensitive and confidential information shared in private conversations with AI assistants.

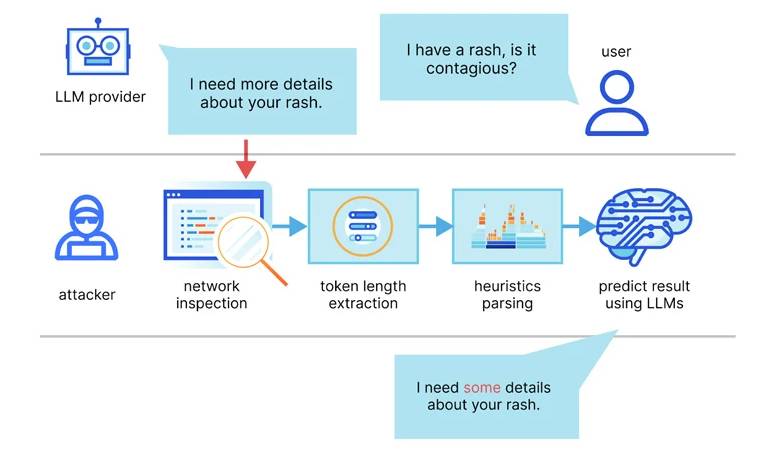

This is accomplished by means of a token inference attack, in which an LLM is trained to translate token-length sequences into their natural-language plaintext. The goal of the attack is to decipher responses in encrypted traffic.

In other words, the core idea is to intercept the real-time chat responses from an LLM provider, infer the length of each token from the network packet headers, extract and parse text segments, and use the custom LLM to infer the response.

Two prerequisites are needed to carry out the attack: an AI chat client running in streaming mode, and an adversary able to capture network traffic between the client and the AI chatbot.
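
The length-inference step can be illustrated with a toy calculation. This sketch assumes each streamed message carries exactly one token plus a constant amount of framing overhead and that encryption preserves payload length up to that constant; real traffic is messier, and the function name and sample numbers are hypothetical rather than taken from the research.

```python
def infer_token_lengths(payload_sizes: list[int], overhead: int) -> list[int]:
    """Estimate token lengths as observed payload size minus a fixed overhead.

    Payloads no larger than the overhead are skipped, since they cannot
    carry a token under the one-token-per-message assumption.
    """
    return [size - overhead for size in payload_sizes if size > overhead]


# Hypothetical observed sizes for three streamed messages, 87 bytes of overhead.
print(infer_token_lengths([90, 88, 93], overhead=87))  # prints [3, 1, 6]
```

The resulting sequence of lengths is the only input the attack needs; the trained model then guesses which text best fits that sequence.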

To mitigate the side-channel attack, companies that develop AI assistants are advised to apply random padding to obscure the actual length of tokens, transmit tokens in larger groups rather than individually, and send complete responses at once instead of token by token.
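
Two of these mitigations, random padding and grouping tokens, can be sketched in a few lines. This is an illustrative framing scheme under stated assumptions, not one used by any particular vendor: the 2-byte length prefix, the zero-byte padding, and the function names are all hypothetical, and the padding only hides lengths once the frame is encrypted in transit.

```python
import secrets


def pad_chunk(text: str, max_pad: int = 16) -> bytes:
    """Frame a chunk as a 2-byte length, the payload, then random-length padding.

    Payloads over 65535 bytes do not fit the 2-byte prefix and raise OverflowError.
    """
    data = text.encode("utf-8")
    pad_len = secrets.randbelow(max_pad + 1)  # 0..max_pad bytes of padding
    return len(data).to_bytes(2, "big") + data + bytes(pad_len)


def unpad_chunk(frame: bytes) -> str:
    """Recover the payload using the length prefix and ignore the padding."""
    length = int.from_bytes(frame[:2], "big")
    return frame[2:2 + length].decode("utf-8")


def batch_tokens(tokens: list[str], batch_size: int = 4) -> list[str]:
    """Join tokens into groups so each message no longer maps to one token."""
    return ["".join(tokens[i:i + batch_size])
            for i in range(0, len(tokens), batch_size)]
```

Both measures trade bandwidth or latency for privacy: padding adds bytes to every message, and batching delays the first visible characters of a response.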

“Balancing security with usability and performance presents a complex challenge that requires careful consideration,” the researchers concluded.